Training

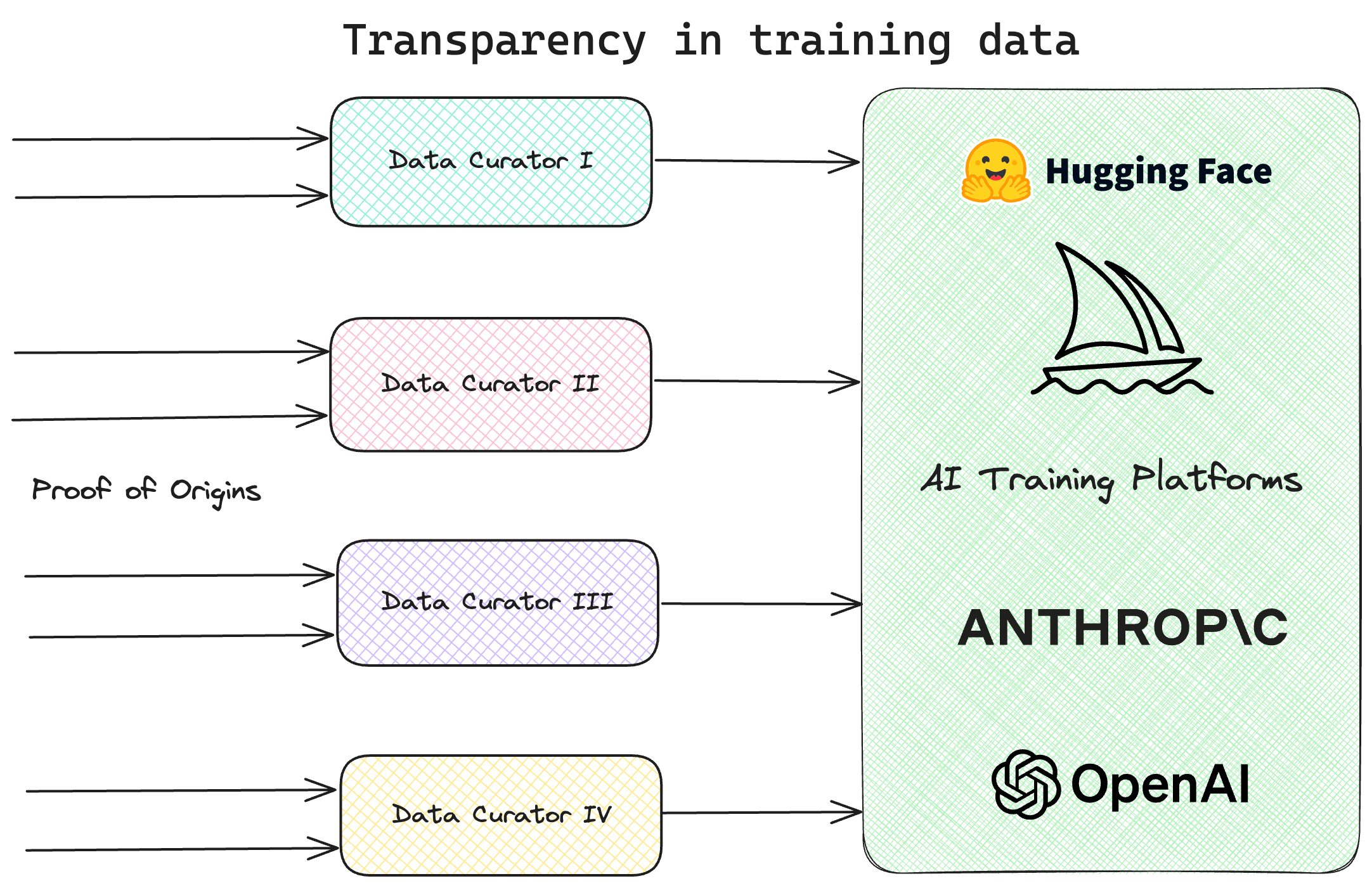

Maya unlocks transparency in AI training by providing verifiable proofs about the origins of training data, so that it can be shown to be legally and ethically sourced.

Transparency is critical for AI training systems to comply with regulations such as the EU AI Act and to guarantee the quality and integrity of AI models. With Maya, data curators can prove the provenance of training data to AI training platforms. This shows that the data used in training has not been poisoned or tampered with, and it discloses what proportion of the data is synthetic (AI-generated).

Core Benefits

- Provide Verifiable Proof: Data curators can offer verifiable proof of where their training data came from, giving training platforms confidence in its origins.

- Build Credibility: By using Maya, data curators can build credibility and trust with AI training platforms. They can show that only data with user consent is used and that none of it is sourced from a “do not train” database.

- Ethical and Legal Compliance: AI training platforms can verify that training data is ethically and legally sourced, supporting compliance with regulations such as the EU AI Act.

- Prevent Malicious Behavior: Maya helps ensure that malicious training data is not used. This prevents potentially harmful model behavior and helps teams identify and understand inherent model biases.

Integration

You can integrate Maya’s SDK (currently in development) into your data collection and processing pipelines. This keeps the final data verifiable from its source through each subsequent processing step, such as normalization, reduction, and sanitization.
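Maya's SDK API is not documented on this page, so the following is not Maya's interface or implementation. It is a minimal, generic sketch of one common way to make a multi-step pipeline auditable: a hash-linked provenance log. Each step records the hash of the data it consumed, the hash of the data it produced, and the hash of the previous record. All names here (`ProvenanceRecord`, `run_pipeline`, `verify`, and the example steps) are hypothetical and used only for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

# Hypothetical illustration only: this is NOT the Maya SDK API.


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest of raw bytes."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    step: str           # name of the processing step, e.g. "normalize"
    input_digest: str   # hash of the data the step consumed ("" for the source)
    output_digest: str  # hash of the data the step produced
    prev_digest: str    # hash of the previous record ("" for the source record)

    def digest(self) -> str:
        # Canonical JSON (sorted keys) so a record always hashes the same way.
        payload = json.dumps(asdict(self), sort_keys=True).encode()
        return sha256_hex(payload)


def run_pipeline(source: bytes, steps):
    """Apply each (name, fn) step in order, appending a hash-linked record per step."""
    log = [ProvenanceRecord("source", "", sha256_hex(source), "")]
    data = source
    for name, fn in steps:
        out = fn(data)
        log.append(
            ProvenanceRecord(name, sha256_hex(data), sha256_hex(out), log[-1].digest())
        )
        data = out
    return data, log


def verify(source: bytes, final: bytes, log) -> bool:
    """Check that the log links the given source bytes to the given final bytes."""
    if not log or log[0].output_digest != sha256_hex(source):
        return False
    for prev, rec in zip(log, log[1:]):
        # Each step must consume what the previous step produced
        # and must point at the hash of the previous record.
        if rec.input_digest != prev.output_digest or rec.prev_digest != prev.digest():
            return False
    return log[-1].output_digest == sha256_hex(final)


# Usage with two hypothetical steps: normalization, then sanitization.
steps = [
    ("normalize", lambda b: b.lower()),
    ("sanitize", lambda b: b.replace(b"secret", b"[redacted]")),
]
final, log = run_pipeline(b"Hello SECRET world", steps)
print(final)                                       # b'hello [redacted] world'
print(verify(b"Hello SECRET world", final, log))   # True
print(verify(b"Hello SECRET world", b"x", log))    # False
```

A plain hash chain only shows that the log is internally consistent and that it links a specific source to a specific output. It does not prove who produced the log or that each step actually ran as claimed. A production system would add signatures or cryptographic proofs on top of this.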

Get in touch with the team for more details.

Note: This page is in progress.